Core Concepts

This page describes the main concepts used in prompt management in agenta.

Prompt and Configuration Management

What Is Prompt Management?

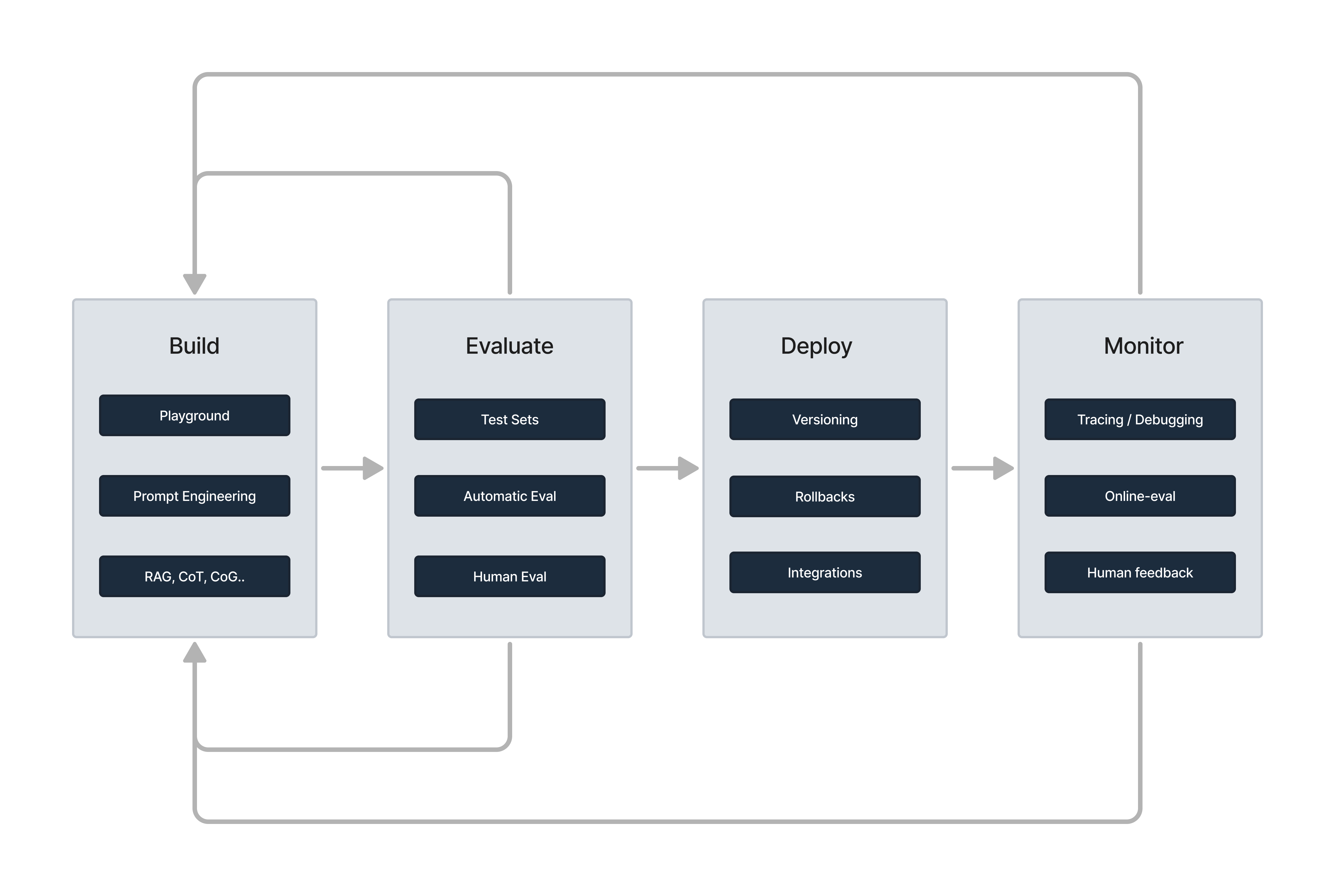

Building LLM-powered applications is an iterative process. In each iteration, you aim to improve the application's performance by refining prompts, adjusting configurations, and evaluating outputs.

A prompt management system provides the tools to carry out this process systematically by:

- Versioning Prompts: Keeping track of different prompts you've tested.

- Linking Prompt Variants to Experiments: Connecting each prompt variant to its evaluation metrics to understand the effect of changes and determine the best variant.

- Publishing Prompts: Providing an environment to publish the best variants to production and maintain a history of changes in production systems.

- Associating Prompts with Traces: Monitoring how changes in prompts affect production metrics.
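Associating prompts with traces comes down to recording, for every generation, which variant and which version produced it. The minimal sketch below shows that idea. The names `Trace`, `find_traces`, and `mean_latency`, and the sample data, are all hypothetical and do not reflect agenta's tracing schema.

```python
from dataclasses import dataclass


# Hypothetical trace record (illustrative only, not agenta's tracing schema).
@dataclass(frozen=True)
class Trace:
    trace_id: str
    variant: str       # name of the prompt variant that produced the output
    version_id: str    # commit id of the exact version that was used
    output: str
    latency_ms: float


def find_traces(traces: list[Trace], version_id: str) -> list[Trace]:
    """Return every generation produced by a given prompt version."""
    return [t for t in traces if t.version_id == version_id]


def mean_latency(traces: list[Trace]) -> float:
    """Average latency of a non-empty set of traces, e.g. to compare two versions."""
    return sum(t.latency_ms for t in traces) / len(traces)


traces = [
    Trace("t1", "casual-tone", "a1b2", "Hello world!", 120.0),
    Trace("t2", "casual-tone", "c3d4", "Hi there!", 80.0),
    Trace("t3", "casual-tone", "c3d4", "Hey!", 100.0),
]
print(mean_latency(find_traces(traces, "c3d4")))  # 90.0
```

Because each trace carries a version id, questions such as "which prompt produced this output?" and "did the new version change latency?" become simple lookups.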

Why Do I Need a Prompt Management System?

A prompt management system enables everyone on the team, from product owners to subject matter experts, to collaborate on creating prompts. Additionally, it helps you answer the following questions:

- Which prompts have we tried?

- What were the outputs of these prompts?

- How do the evaluation results of these prompts compare?

- Which prompt was used for a specific generation in production?

- What was the effect of deploying the new version of this prompt in production?

- Who on the team made changes to a particular prompt in production?

What Is the Difference Between Prompt and Configuration Management?

Prompts are a special case of configurations. Most LLM applications have more parameters than just the prompt, model, and model configuration. For example, if your application involves a chain of two prompts, you should version the two prompts together since they are interdependent, rather than versioning each one separately.

Configuration management covers all the parameters and settings that define how your LLM application operates: prompts, model settings, and other workflow parameters. For instance, a RAG application's configuration would include top_k and the embedding model, while a chain of two prompts would have both prompts and their model parameters as its configuration.
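As a minimal sketch of what versioning the whole configuration means, the example below groups each application's parameters into a single object, so one version captures all of them together. The class and field names (`RagConfig`, `ChainConfig`, `PromptStep`, `embedding_model`) and all values are illustrative placeholders, not agenta's configuration schema.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class RagConfig:
    # Retrieval settings sit next to the prompt, so they are versioned together.
    prompt: str
    model: str
    temperature: float
    top_k: int             # number of retrieved chunks passed to the prompt
    embedding_model: str   # model used to embed the query and the documents


@dataclass(frozen=True)
class PromptStep:
    prompt: str
    model: str
    temperature: float


@dataclass(frozen=True)
class ChainConfig:
    # Two interdependent prompts: the second consumes the first one's output,
    # so both are stored (and versioned) as one configuration.
    first: PromptStep
    second: PromptStep


rag = RagConfig(
    prompt="Answer using only this context:\n{context}\n\nQuestion: {question}",
    model="gpt-4o-mini",
    temperature=0.2,
    top_k=5,
    embedding_model="text-embedding-3-small",
)
chain = ChainConfig(
    first=PromptStep("Summarize:\n{text}", "gpt-4o-mini", 0.0),
    second=PromptStep("Write a tweet from this summary:\n{summary}", "gpt-4o-mini", 0.9),
)
print(asdict(chain)["second"]["temperature"])  # 0.9
```

Serializing the nested object with `asdict` yields one document per configuration, which is the unit you would store as a version.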

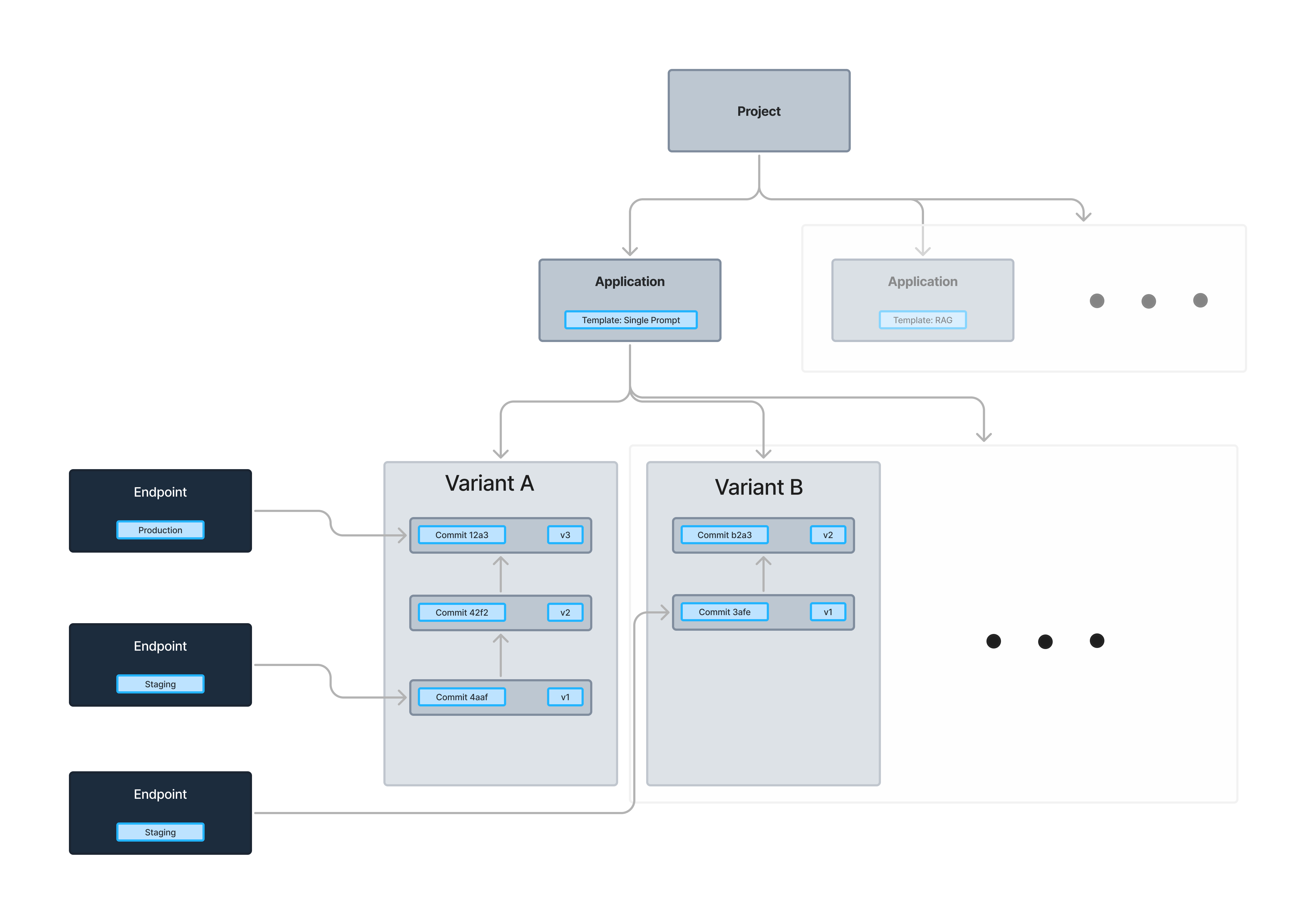

Taxonomy in agenta

Templates

Templates are the workflows used by LLM-powered applications. Agenta comes with two default templates:

- Completion Application Template: For single-prompt applications that generate text completions.

- Chat Application Template: For applications that handle conversational interactions.

Agenta also allows you to create custom templates for your workflows using our SDK. Examples include:

- Retrieval-Augmented Generation (RAG) Applications

- Chains of Multiple Prompts

- Agents Interacting with External APIs

After creating a template, you can interact with it in the playground, run no-code evaluations, and publish versions, all from the web UI.
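Conceptually, a template is a workflow whose behavior is fully determined by its configuration. The sketch below writes a RAG-style workflow as a plain Python function. It is not the agenta SDK's API: `DOCS`, `retrieve`, `rag_workflow`, and the `llm` callable are hypothetical stand-ins, and the toy retriever ranks documents by shared words instead of using embeddings.

```python
import re
from typing import Callable

# Hypothetical in-memory corpus standing in for a vector store.
DOCS = [
    "Agenta versions every change to a variant.",
    "Deployments point to a specific version.",
    "Variants are configurations of an application.",
]


def words(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, top_k: int) -> list[str]:
    """Toy retriever: rank documents by the number of words shared with the question."""
    q = words(question)
    ranked = sorted(DOCS, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:top_k]


def rag_workflow(config: dict, question: str, llm: Callable[[str], str]) -> str:
    """A RAG 'template': every tunable knob (prompt, top_k) comes from `config`."""
    context = "\n".join(retrieve(question, config["top_k"]))
    prompt = config["prompt"].format(context=context, question=question)
    return llm(prompt)


def echo_llm(prompt: str) -> str:
    """Fake model that returns its prompt, so the example runs without an API key."""
    return prompt


answer = rag_workflow(
    {"prompt": "Context:\n{context}\nQ: {question}", "top_k": 1},
    "What do deployments point to?",
    echo_llm,
)
print(answer)
```

Keeping every tunable value in `config` is what lets a playground or evaluation run swap configurations without touching the workflow code.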

Applications

An application uses a template to solve a specific use case. For instance, an application could use the single-prompt template for tasks like:

- Tweet Generation: Crafting engaging tweets based on input topics.

- Article Summarization: Condensing long articles into key points.
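The relationship between templates and applications can be sketched as one template function reused by several applications that differ only in their configuration. In this minimal illustration, `completion_template`, the application names, and the prompts are hypothetical.

```python
from typing import Callable


def completion_template(config: dict, inputs: dict, llm: Callable[[str], str]) -> str:
    """Single-prompt template: fill the prompt with the inputs and call the model once."""
    return llm(config["prompt"].format(**inputs))


# Two applications built on the same template, each solving its own use case.
applications = {
    "tweet-generation": {"prompt": "Write an engaging tweet about {topic}."},
    "article-summarization": {"prompt": "Summarize the key points of:\n{article}"},
}


def echo_llm(prompt: str) -> str:
    """Stand-in model that returns its prompt, so the example runs offline."""
    return prompt


tweet = completion_template(applications["tweet-generation"], {"topic": "LLM evals"}, echo_llm)
print(tweet)  # Write an engaging tweet about LLM evals.
```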

Variants

Within each application, you can create variants. Variants are different configurations of the application, allowing you to experiment with and compare multiple approaches. For example, for the "tweet generation" application, you might create variants that:

- Use different prompt phrasings.

- Adjust model parameters like temperature or maximum tokens.

- Incorporate different styles or tones (e.g., professional vs. casual).
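Since variants are just different configurations of the same application, comparing two of them starts with seeing which parameters differ. The minimal sketch below assumes two hypothetical tweet-generation variants stored as dictionaries; the parameter names and values are illustrative.

```python
base = {
    "prompt": "Write a {tone} tweet about {topic}.",
    "temperature": 0.7,
    "max_tokens": 60,
    "tone": "professional",
}

# A second variant that changes only the tone and the temperature.
variants = {
    "professional": base,
    "casual": {**base, "tone": "casual", "temperature": 1.0},
}


def diff(a: dict, b: dict) -> dict:
    """Return the parameters whose values differ between two variants."""
    return {k: (a.get(k), b.get(k)) for k in sorted(a.keys() | b.keys()) if a.get(k) != b.get(k)}


print(diff(variants["professional"], variants["casual"]))
# {'temperature': (0.7, 1.0), 'tone': ('professional', 'casual')}
```

Keeping the differences small and explicit makes it easier to attribute a change in evaluation results to a specific parameter.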

Versions

Every variant is versioned, and each version is immutable. When you make changes to a variant, a new version is created. Each version has a commit id that uniquely identifies it.
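The versioning model can be sketched as an append-only history of frozen snapshots. This is a minimal illustration, not agenta's implementation. In particular, deriving the commit id from a SHA-256 hash of the parent id and the configuration is an assumption made for the example. `Version`, `Variant`, and `commit` are hypothetical names.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)  # frozen: a version cannot be modified after creation
class Version:
    commit_id: str
    parent_id: Optional[str]
    config: tuple  # sorted (key, value) pairs, kept immutable


class Variant:
    def __init__(self, name: str, config: dict) -> None:
        self.name = name
        self.history: list[Version] = []
        self.commit(config)

    def commit(self, config: dict) -> Version:
        """Every change appends a new version; earlier versions stay untouched."""
        parent = self.history[-1].commit_id if self.history else None
        payload = json.dumps({"parent": parent, "config": config}, sort_keys=True)
        commit_id = hashlib.sha256(payload.encode()).hexdigest()[:12]  # assumed id scheme
        version = Version(commit_id, parent, tuple(sorted(config.items())))
        self.history.append(version)
        return version

    @property
    def latest(self) -> Version:
        return self.history[-1]


v = Variant("casual", {"prompt": "Write a tweet about {topic}.", "temperature": 0.7})
first = v.latest
second = v.commit({"prompt": "Write a fun tweet about {topic}.", "temperature": 0.9})
print(len(v.history), first.commit_id != second.commit_id, second.parent_id == first.commit_id)
# 2 True True
```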

Deployments

Deployments are published versions of your variants. You can commit a version of a variant to a deployment, then integrate your code base to fetch the configuration deployed to that environment. You can also directly call the endpoint of the application running with that configuration.

By default, every application comes with three deployment environments:

- Development: For initial testing and experimentation.

- Staging: For pre-production testing and quality assurance.

- Production: For live use with real users.

When you publish a variant to an environment, the latest version of that variant is published. Each deployment points to a specific version of a variant (a specific commit), so updating the variant after publishing does not update the environment: it keeps serving the published version until you publish again.
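The pinning behavior described above can be sketched as an environment that stores a reference to one immutable version. In agenta you would fetch the deployed configuration through its own SDK or API. The classes here (`Version`, `Variant`, `Environment`, `fetch_config`) and the hand-written commit ids are hypothetical and only illustrate the semantics.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Version:
    commit_id: str
    config: tuple  # immutable (key, value) pairs


class Variant:
    """Hypothetical variant: an append-only list of versions."""

    def __init__(self) -> None:
        self.history: list[Version] = []

    def commit(self, commit_id: str, config: dict) -> None:
        self.history.append(Version(commit_id, tuple(sorted(config.items()))))

    @property
    def latest(self) -> Version:
        return self.history[-1]


class Environment:
    """A deployment (e.g. production) that points to one specific version."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.deployed: Optional[Version] = None

    def publish(self, variant: Variant) -> None:
        # Publishing pins the variant's latest version at this moment.
        self.deployed = variant.latest

    def fetch_config(self) -> dict:
        """What application code would read at runtime for this environment."""
        if self.deployed is None:
            raise LookupError(f"nothing deployed to {self.name}")
        return dict(self.deployed.config)


environments = {name: Environment(name) for name in ("development", "staging", "production")}

variant = Variant()
variant.commit("a1b2", {"prompt": "Write a tweet about {topic}.", "temperature": 0.7})
environments["production"].publish(variant)

# Editing the variant afterwards creates a new version...
variant.commit("c3d4", {"prompt": "Write a fun tweet about {topic}.", "temperature": 0.9})

# ...but production still serves the version that was published.
print(environments["production"].deployed.commit_id)  # a1b2
print(environments["production"].fetch_config()["temperature"])  # 0.7
```

Publishing `variant` to production again would move the pointer to `c3d4`.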